Principal Components and Linear Discriminant Analyses with Methyl-IT

A Methyl-IT user

guide

A Methyl-IT user guide

Department of Biology and Plant Science.

Pennsylvania State University, University Park, PA 16802

Maintainer: Robersy Sanchez

Pennsylvania State University, University Park, PA 16802

Maintainer: Robersy Sanchez

15 January 2024

Source:vignettes/pca_lda_with_methylit.Rmd

pca_lda_with_methylit.RmdAbstract

When methylation analysis is intended for diagnostic/prognostic purposes, for example, in clinical applications for patient diagnostics, to know whether the patient would be in healthy or disease stage we would like to have a good predictor tool in our side. It turns out that classical machine learning (ML) tools like hierarchical clustering, principal components and linear discriminant analysis can help us to reach such a goal. The current Methyl-IT downstream analysis is equipped with the mentioned ML tools.

Background

The discrimination of the methylation signal from the stochastic methylation background resultant from the standard (non-stressful) biological processes is a critical step for the genome-wide methylation analysis. Such a discrimination requires for the knowledge of the probability distribution of the information divergence of methylation levels and a proper evaluation of the classification performance of differentially methylated positions (DMPs) into two classes: DMPs from control and DMPs from treatment.

Once a methylation analysis is performed to detect the genome-wide DMPs, a natural next step is to investigate whether the detected DMPs carries enough discriminatory power to discern between individual groups. Then, we must move from the methylation analysis to statistical/machine learning field (multivariate statistics). To reach our goal, each individual can be represented as a vector from the \(N\)-dimensional space of DMPs, where DMPs can be expressed in terms of some suitable measurement as function of the methylation levels. These suitable measurement are information divergences, such as total variation distance (\(TV_d\), the absolute value of methylation levels) and Hellinger divergence (\(H\)). It is worthy to observe that methylation levels are uncertainty measurements and, therefore, they do not carry information per se. Information is only carried on measurements expressing uncertainty variations like \(TV_d\), \(H\), Jensen-Shannon Divergence, and in general, the so called information theoretical divergences (1).

The \(N\)-dimensional space of DMPs is, usually, too big. So, a first dimension reduction is required. This can be done by splitting the chromosomes into non-overlapping genomic region and next computing some statistic like the sum or mean of some information divergences of methylation levels at DMPs inside of each region. Still we will have to deal with a multidimensional space, but acceptable for a meaningful application of hierarchical clustering (HC), principal component analysis (PCA) and linear discriminant analysis (LDA). In the current examples, Methyl-IT methylation analysis will be applied to a dataset of simulated samples to detect DMPs on then. Next, after space dimension reduction, the mentioned machine learning approached will be applied.

Data generation

For the current example on methylation analysis with Methyl-IT we will use simulated data. Read-count matrices of methylated and unmethylated cytosine are generated with MethylIT.utils function simulateCounts. A basic example generating datasets is given in the web page: Methylation analysis with Methyl-IT

library(MethylIT)

library(MethylIT.utils)

library(ggplot2)

library(ape)

alpha.ct <- 0.019

alpha.g1 <- 0.022

alpha.g2 <- 0.025

# The number of cytosine sites to generate

sites = 1.5e5

# Set a seed for pseudo-random number generation

set.seed(124)

control.nam <- c("C1", "C2", "C3", "C4", "C5")

treatment.nam1 <- c("T1", "T2", "T3", "T4", "T5")

treatment.nam2 <- c("T6", "T7", "T8", "T9", "T10")

# Reference group

ref0 = simulateCounts(num.samples = 3, sites = sites, alpha = alpha.ct,

beta = 0.5, size = 50, theta = 4.5,

sample.ids = c("R1", "R2", "R3"))

# Control group

ctrl = simulateCounts(num.samples = 5, sites = sites, alpha = alpha.ct,

beta = 0.5, size = 50, theta = 4.5,

sample.ids = control.nam)

# Treatment group II

treat = simulateCounts(num.samples = 5, sites = sites, alpha = alpha.g1,

beta = 0.5, size = 50, theta = 4.5,

sample.ids = treatment.nam1)

# Treatment group II

treat2 = simulateCounts(num.samples = 5, sites = sites, alpha = alpha.g2,

beta = 0.5, size = 50, theta = 4.5,

sample.ids = treatment.nam2)A reference sample (virtual individual) is created using individual samples from the control population using function poolFromGRlist. The reference sample is further used to compute the information divergences of methylation levels, \(TV_d\) and \(H\), with function estimateDivergence. This is a first fundamental step to remove the background noise (fluctuations) generated by the inherent stochasticity of the molecular processes in the cells.

# === Methylation level divergences ===

# Reference sample

ref = poolFromGRlist(ref0, stat = "mean", num.cores = 4L, verbose = FALSE)

divs <- estimateDivergence(

ref = ref,

indiv = c(ctrl, treat, treat2),

Bayesian = TRUE,

and.min.cov = FALSE,

num.cores = 6L,

percentile = 1,

verbose = FALSE)

# To remove hd == 0 to estimate. The methylation signal only is given for

divs = lapply(divs, function(div) div[ abs(div$hdiv) > 0 ], keep.attr = TRUE)

names(divs) <- names(divs)

divs## InfDiv object of length: 15

## -------

## $C1

## GRanges object with 26720 ranges and 9 metadata columns:

## seqnames ranges strand | c1 t1 c2 t2

## <Rle> <IRanges> <Rle> | <numeric> <numeric> <numeric> <numeric>

## [1] 1 7 * | 2 42 6 38

## [2] 1 12 * | 23 62 0 85

## [3] 1 19 * | 12 27 0 39

## [4] 1 22 * | 13 27 0 40

## [5] 1 31 * | 58 49 80 27

## ... ... ... ... . ... ... ... ...

## [26716] 1 149977 * | 11 35 34 12

## [26717] 1 149979 * | 16 34 0 50

## [26718] 1 149990 * | 24 54 0 78

## [26719] 1 149994 * | 25 51 76 0

## [26720] 1 149995 * | 16 63 0 79

## p1 p2 TV bay.TV hdiv

## <numeric> <numeric> <numeric> <numeric> <numeric>

## [1] 0.0721422 0.13768432 0.0909091 0.0655421 0.526471

## [2] 0.2659280 0.00327293 -0.2705882 -0.2626551 19.800862

## [3] 0.2920976 0.00697775 -0.3076923 -0.2851199 9.313997

## [4] 0.3068138 0.00681017 -0.3250000 -0.3000036 10.213202

## [5] 0.5191743 0.73900460 0.2056075 0.2198303 5.688769

## ... ... ... ... ... ...

## [26716] 0.235823 0.71967696 0.500000 0.483854 11.7687

## [26717] 0.305641 0.00549133 -0.320000 -0.300150 13.0602

## [26718] 0.299161 0.00356061 -0.307692 -0.295601 20.8075

## [26719] 0.318165 0.98256166 0.671053 0.664396 51.1029

## [26720] 0.203882 0.00351646 -0.202532 -0.200366 13.2064

## -------

## seqinfo: 1 sequence from an unspecified genome; no seqlengths

##

## ...

## <14 more GRanges elements>

## -------To get some statistical description about the sample is useful. Here, empirical critical values for the probability distribution of \(H\) and \(TV\) can is obtained using quantile function from the R package stats.

critical.val <- do.call(rbind, lapply(divs, function(x) {

x <- x[x$hdiv > 0]

hd.95 = quantile(x$hdiv, 0.95)

tv.95 = quantile(abs(x$bay.TV), 0.95)

return(c(tv = tv.95, hd = hd.95))

}))

critical.val## tv.95% hd.95%

## C1 0.6523406 62.26763

## C2 0.6500765 60.89674

## C3 0.6508274 61.48960

## C4 0.4858965 56.63342

## C5 0.4970348 58.16023

## T1 0.9236731 126.34191

## T2 0.9136241 120.45367

## T3 0.9137810 121.99373

## T4 0.9192143 124.54298

## T5 0.9225918 124.25236

## T6 0.9447922 132.69308

## T7 0.9447348 134.88441

## T8 0.9408379 131.80496

## T9 0.9482600 134.68997

## T10 0.9456255 133.87163Modeling the methylation signal

Here, the methylation signal is expressed in terms of Hellinger divergence of methylation levels. Here, the signal distribution is modelled by a Weibull probability distribution model. Basically, the model could be a member of the generalized gamma distribution family. For example, it could be gamma distribution, Weibull, or log-normal. To describe the signal, we may prefer a model with a cross-validations: R.Cross.val > 0.95. Cross-validations for the nonlinear regressions are performed in each methylome as described in (2). The non-linear fit is performed through the function nonlinearFitDist

The best model excluding “GGamma3P” model, for which the computation is computationally expensive:

d <- c("Weibull2P", "Weibull3P", "Gamma2P", "Gamma3P")

gof <- gofReport(HD = divs, column = 9L, model = d, num.cores = 6L,

output = "all", verbose = FALSE)

gof$bestModel## C1 C2 C3 C4 C5 T1

## "Weibull2P" "Weibull2P" "Weibull2P" "Weibull2P" "Weibull2P" "Weibull2P"

## T2 T3 T4 T5 T6 T7

## "Weibull2P" "Weibull2P" "Weibull2P" "Weibull2P" "Weibull2P" "Weibull2P"

## T8 T9 T10

## "Weibull2P" "Weibull2P" "Weibull2P"Although we did not included the main member of the generalized gamma distribution family (“GGamma3P”), the Cross-validations correlation coefficient w3p_R.Cross.val values above 0.99 gives us some peace of mind on our confidence on the model prediction power/performance when confronted with external data sets (AIC and BIC are not conceived to provide such an information).

gof$stats## w2p_AIC w2p_R.Cross.val w3p_AIC w3p_R.Cross.val g2p_AIC g2p_R.Cross.val

## C1 -172642.2 0.9994640 Inf 0 -164820.4 0.9993928

## C2 -175595.0 0.9995131 Inf 0 -167532.7 0.9994499

## C3 -177238.5 0.9995420 Inf 0 -169774.2 0.9994861

## C4 -219212.7 0.9994948 Inf 0 -206296.0 0.9993131

## C5 -217448.4 0.9994364 Inf 0 -205264.1 0.9992280

## T1 -168080.2 0.9972701 Inf 0 -160888.3 0.9963827

## T2 -167285.4 0.9972727 Inf 0 -160748.1 0.9964683

## T3 -170302.3 0.9974340 Inf 0 -163998.4 0.9966883

## T4 -168551.4 0.9973219 Inf 0 -162169.8 0.9965314

## T5 -167122.8 0.9972266 Inf 0 -160694.8 0.9964093

## T6 -173245.9 0.9971878 Inf 0 -165656.8 0.9962175

## T7 -170905.5 0.9970146 Inf 0 -163342.2 0.9959969

## T8 -171372.8 0.9970757 Inf 0 -163649.6 0.9960768

## T9 -170097.3 0.9969613 Inf 0 -162250.6 0.9958859

## T10 -171267.1 0.9970284 Inf 0 -163857.5 0.9960168

## g3p_AIC g3p_R.Cross.val bestModel

## C1 Inf 0 w2p

## C2 Inf 0 w2p

## C3 Inf 0 w2p

## C4 Inf 0 w2p

## C5 Inf 0 w2p

## T1 Inf 0 w2p

## T2 Inf 0 w2p

## T3 Inf 0 w2p

## T4 Inf 0 w2p

## T5 Inf 0 w2p

## T6 Inf 0 w2p

## T7 Inf 0 w2p

## T8 Inf 0 w2p

## T9 Inf 0 w2p

## T10 Inf 0 w2pEstimation of an optimal cutpoint to discriminate the signal induced by the treatment

The above statistical description of the dataset (evidently) suggests that there two main groups: control and treatments, while treatment group would split into two subgroups of samples. In the current case, to search for a good cutpoint, we do not need to use all the samples. The critical value \(H_{\alpha=0.05}=33.51223\) suggests that any optimal cutpoint for the subset of samples T1 to T5 will be optimal for the samples T6 to T10 as well.

Below, we are letting the PCA+LDA model classifier to take the decision on whether a differentially methylated cytosine position is a treatment DMP. To do it, Methyl-IT function getPotentialDIMP is used to get methylation signal probabilities of the observed \(H\) values for all cytosine site (alpha = 1), in accordance with the 2-parameter Weibull distribution model. Next, this information will be used to identify DMPs using Methyl-IT function estimateCutPoint. Cytosine positions with \(H\) values above the cutpoint are considered DMPs. Finally, a PCA + QDA model classifier will be fitted to classify DMPs into two classes: DMPs from control and those from treatment.

dmps <- getPotentialDIMP(LR = divs,

nlms = gof$nlms,

div.col = 9L,

tv.cut = 0.461,

tv.col = 8,

alpha = 0.05,

dist.name = gof$bestModel)

cutp = estimateCutPoint(LR = dmps,

simple = FALSE,

column = c(hdiv = TRUE, TV = TRUE,

wprob = TRUE, pos = TRUE),

classifier1 = "pca.lda",

classifier2 = "pca.qda",

control.names = control.nam,

treatment.names = treatment.nam1,

post.cut = 0.5,

cut.values = seq(15, 38, 1),

clas.perf = TRUE,

prop = 0.6,

center = FALSE,

scale = FALSE,

n.pc = 4,

div.col = 9L,

stat = 0)

cutp## Cutpoint estimation with 'pca.lda' classifier

## Cutpoint search performed using model posterior probabilities

##

## Posterior probability used to get the cutpoint = 0.5

## Cytosine sites with treatment PostProbCut >= 0.5 have a

## divergence value >= 78.68617

##

## Optimized statistic: Accuracy = 1

## Cutpoint = 78.686

##

## Model classifier 'pca.qda'

##

## The accessible objects in the output list are:

## Length Class Mode

## cutpoint 1 -none- numeric

## testSetPerformance 6 confusionMatrix list

## testSetModel.FDR 1 -none- numeric

## model 5 pcaQDA list

## modelConfMatrix 6 confusionMatrix list

## initModel 1 -none- character

## postProbCut 1 -none- numeric

## postCut 1 -none- numeric

## classifier 1 -none- character

## statistic 1 -none- character

## optStatVal 1 -none- numeric

## cutpData 1 -none- logicalIn this case, the cutpoint \(H_{cutpoint}=92.138\) is lower from what is expected from the higher treatment empirical \(H_{\alpha = 0.05}^{TT_{Emp}}=120.45119\) critical value: \(H_{cutpoint} < H_{\alpha = 0.05}^{TT_{Emp}}\).

The model performance in in the test dataset is:

cutp$testSetPerformance## Confusion Matrix and Statistics

##

## Reference

## Prediction CT TT

## CT 2579 0

## TT 0 6418

##

## Accuracy : 1

## 95% CI : (0.9996, 1)

## No Information Rate : 0.7133

## P-Value [Acc > NIR] : < 2.2e-16

##

## Kappa : 1

##

## Mcnemar's Test P-Value : NA

##

## Sensitivity : 1.0000

## Specificity : 1.0000

## Pos Pred Value : 1.0000

## Neg Pred Value : 1.0000

## Prevalence : 0.7133

## Detection Rate : 0.7133

## Detection Prevalence : 0.7133

## Balanced Accuracy : 1.0000

##

## 'Positive' Class : TT

## Representing individual samples as vectors from the N-dimensional space

The above cutpoint can be used to identify DMPs from control and

treatment. The PCA+QDA model classifier can be used any time to

discriminate control DMPs from those treatment. DMPs are retrieved using

selectDIMP

function (selectDIMP):

dmps <- selectDIMP(LR = divs, div.col = 9L, cutpoint = cutp$cutpoint,

tv.cut = 0.34, tv.col = 7)

sapply(dmps,length)## C1 C2 C3 C4 C5 T1 T2 T3 T4 T5 T6 T7 T8 T9 T10

## 704 659 644 789 861 3403 3244 3234 3308 3328 3826 3888 3840 3957 3875Next, to represent individual samples as vectors from the N-dimensional space, we can use getGRangesStat . Here, the simulated “chromosome” is split into regions of 350bp non-overlapping windows. and the density of Hellinger divergences values is taken for each windows.

ns <- names(dmps)

DMRs <- getGRangesStat(GR = dmps, win.size = 350, step.size = 350,

stat = "density", column = 9L, scaling = 1)

names(DMRs) <- ns

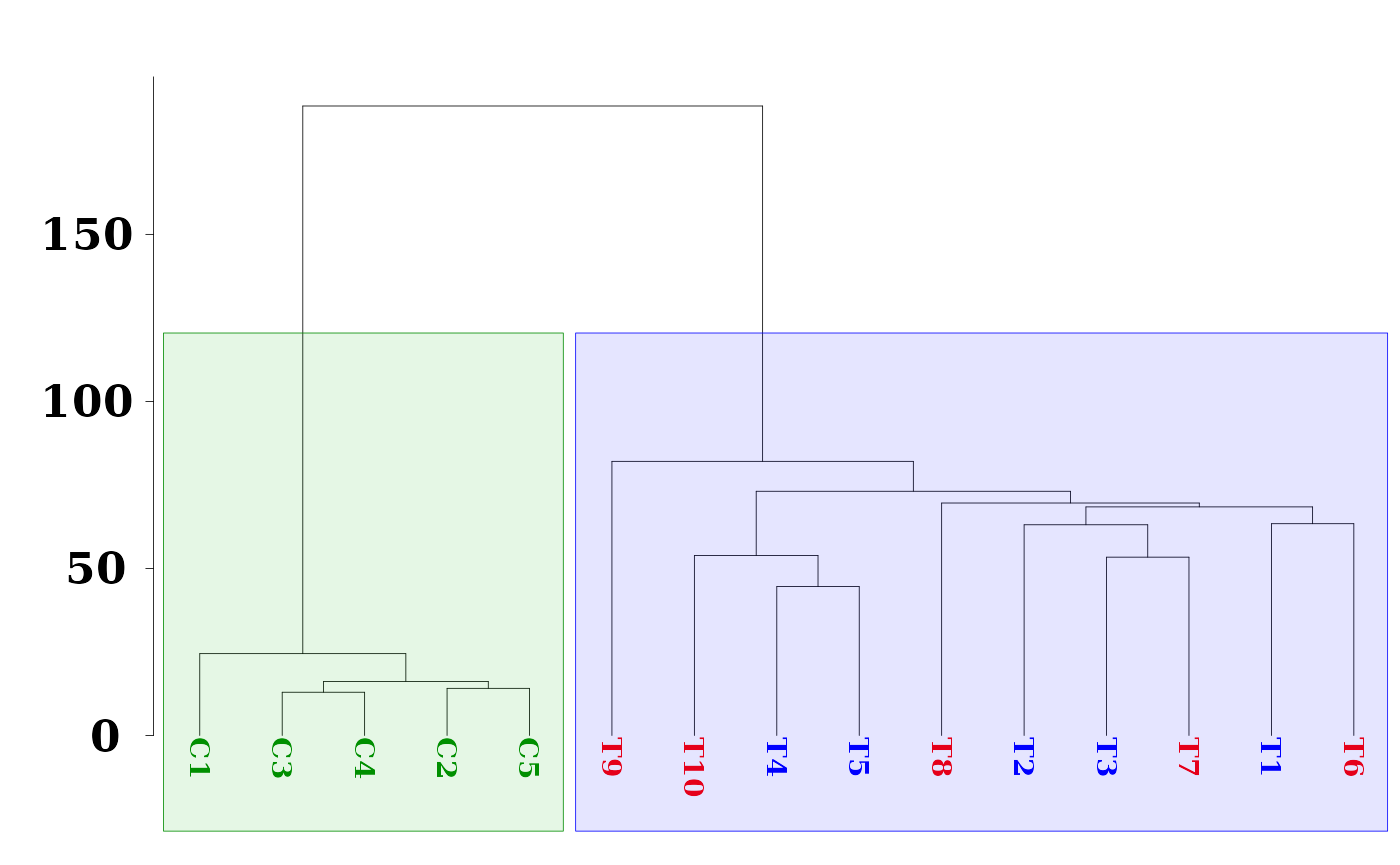

as(DMRs, "GRangesList")Hierarchical Clustering

Hierarchical clustering (HC) is an unsupervised machine learning approach. HC can provide an initial estimation of the number of possible groups and information on their members. However, the effectiveness of HC will depend on the experimental dataset, the metric used, and the agglomeration algorithm applied. For an unknown reason (and based on the personal experience of the author working in numerical taxonomy), Ward’s agglomeration algorithm performs much better on biological experimental datasets than the rest of the available algorithms like UPGMA, UPGMC, etc.

dmgm <- uniqueGRanges(DMRs, verbose = FALSE)

dmgm <- t(as.matrix(mcols(dmgm)))

rownames(dmgm) <- ns

sampleNames <- ns

hc = hclust(dist(data.frame( dmgm ))^2, method = 'ward.D2')

hc.rsq = hc

hc.rsq$height <- sqrt( hc$height )Dendrogram

colors = sampleNames

colors[grep("C", colors)] <- "green4"

colors[grep("T[6-9]{1}", colors)] <- "red"

colors[grep("T10", colors)] <- "red"

colors[grep("T[1-5]", colors)] <- "blue"

# rgb(red, green, blue, alpha, names = NULL, maxColorValue = 1)

clusters.color = c(rgb(0, 0.7, 0, 0.1), rgb(0, 0, 1, 0.1), rgb(1, 0.2, 0, 0.1))

par(font.lab=2,font=3,font.axis=2, mar=c(0,4,2,0), family="serif" , lwd = 0.4)

plot(as.phylo(hc.rsq), tip.color = colors, label.offset = 0.5, font = 2, cex = 0.9,

edge.width = 0.4, direction = "downwards", no.margin = FALSE,

align.tip.label = TRUE, adj = 0)

axisPhylo( 2, las = 1, lwd = 0.4, cex.axis = 1.4, hadj = 0.8, tck = -0.01 )

## cuts = c(xleft, ybottom, xright, ytop)

hclust_rect(hc.rsq, k = 2L, border = c("green4", "blue", "red"),

color = clusters.color, cuts = c(0.56, 1.4, 0.41, 120))

Here, we have use function as.phylo from the R package ape for better dendrogram visualization and function hclust_rect from MethylIT.utils R package to draw rectangles with background colors around the branches of a dendrogram highlighting the corresponding clusters.

PCA + LDA

MethylIT function pcaLDA will be used to perform the PCA and PCA + LDA analyses. The function returns a list of two objects: 1) ‘lda’: an object of class ‘lda’ from package ‘MASS’. 2) ‘pca’: an object of class ‘prcomp’ from package ‘stats’. For information on how to use these objects see ?lda and ?prcomp.

Unlike hierarchical clustering (HC), LDA is a supervised machine learning approach. So, we must provide a prior classification of the individuals, which can be derived, for example, from the HC, or from a previous exploratory analysis with PCA.

# A prior classification derived from HC

grps <- cutree(hc, k = 3)

grps[grep(1, grps)] <- "CT"

grps[grep(2, grps)] <- "T1"

grps[grep(3, grps)] <- "T2"

grps <- factor(grps)

ld <- pcaLDA(data = data.frame(dmgm), grouping = grps, n.pc = 3, max.pc = 3,

scale = FALSE, center = FALSE, tol = 1e-6)

summary(ld$pca)## Importance of first k=3 (out of 15) components:

## PC1 PC2 PC3

## Standard deviation 221.4347 35.6409 29.79811

## Proportion of Variance 0.9032 0.0234 0.01636

## Cumulative Proportion 0.9032 0.9266 0.94300We may retain enough components so that the cumulative percent of variance accounted for at least 70 to 80%. By setting \(scale=TRUE\) and \(center=TRUE\), we could have different results and would improve or not our results. In particular, these settings are essentials if the N-dimensional space is integrated by variables from different measurement scales/units, for example, Kg and g, or Kg and Km.

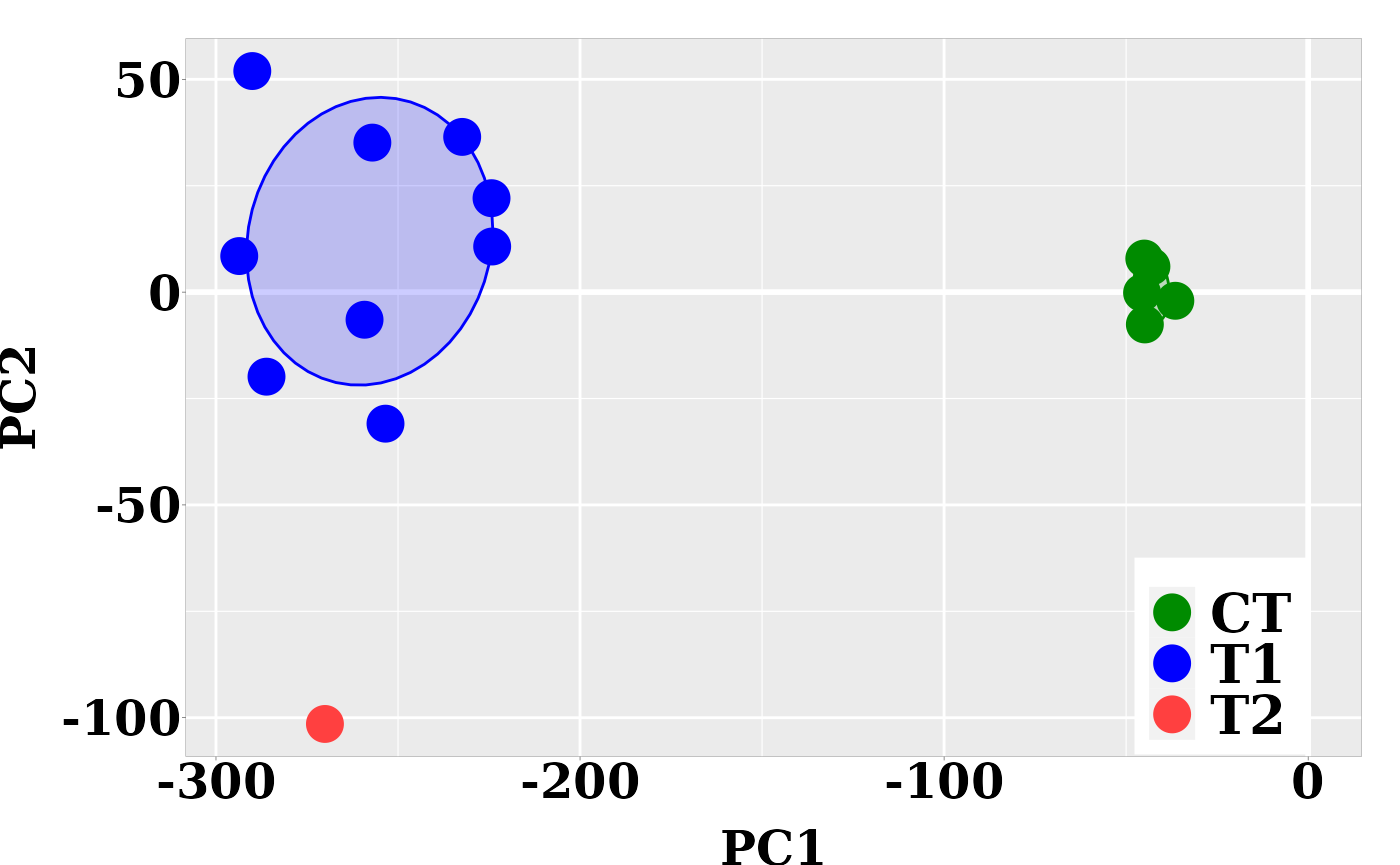

PCA

The individual coordinates in the principal components (PCs) are returned by function pcaLDA. In the current case, based on the cumulative proportion of variance, the two firsts PCs carried about the 84% of the total sample variance and could split the sample into meaningful groups.

pca.coord <- ld$pca$x

pca.coord## PC1 PC2 PC3

## C1 -45.00927 7.884912 17.39353588

## C2 -43.05409 6.015694 2.75771385

## C3 -36.48632 -2.033121 2.35944370

## C4 -44.85556 -7.591736 -0.03176889

## C5 -45.62115 -0.162804 7.90475426

## T1 -293.61151 8.474484 -4.12206109

## T2 -253.43665 -30.909978 43.28911190

## T3 -224.13477 10.719154 -11.12880291

## T4 -232.37265 36.469259 0.60205009

## T5 -224.31342 22.069410 3.54331693

## T6 -290.02667 51.944972 6.58200024

## T7 -286.12184 -19.867377 -8.32584719

## T8 -259.19317 -6.488206 -89.57270641

## T9 -270.07011 -101.465917 12.84137767

## T10 -257.04937 35.129342 41.52432128Graphic PC1 vs PC2

Next, the graphic for individual coordinates in the two firsts PCs can be easily visualized now:

dt <- data.frame(pca.coord[, 1:2], subgrp = grps)

p0 <- theme(

axis.text.x = element_text( face = "bold", size = 18, color="black",

# hjust = 0.5, vjust = 0.5,

family = "serif", angle = 0,

margin = margin(1,0,1,0, unit = "pt" )),

axis.text.y = element_text( face = "bold", size = 18, color="black",

family = "serif",

margin = margin( 0,0.1,0,0, unit = "mm" )),

axis.title.x = element_text(face = "bold", family = "serif", size = 18,

color="black", vjust = 0 ),

axis.title.y = element_text(face = "bold", family = "serif", size = 18,

color="black",

margin = margin( 0,2,0,0, unit = "mm" ) ),

legend.title=element_blank(),

legend.text = element_text(size = 20, face = "bold", family = "serif"),

legend.position = c(0.88, 0.14),

panel.border = element_rect(fill=NA, colour = "black",size=0.07),

panel.grid.minor = element_line(color= "white",size = 0.2),

axis.ticks = element_line(size = 0.1), axis.ticks.length = unit(0.5, "mm"),

plot.margin = unit(c(1,1,0,0), "lines")) ## Warning: The `size` argument of `element_line()` is deprecated as of ggplot2 3.4.0.

## ℹ Please use the `linewidth` argument instead.

## This warning is displayed once every 8 hours.

## Call `lifecycle::last_lifecycle_warnings()` to see where this warning was

## generated.## Warning: The `size` argument of `element_rect()` is deprecated as of ggplot2 3.4.0.

## ℹ Please use the `linewidth` argument instead.

## This warning is displayed once every 8 hours.

## Call `lifecycle::last_lifecycle_warnings()` to see where this warning was

## generated.

ggplot(dt, aes(x = PC1, y = PC2, colour = grps)) +

geom_vline(xintercept = 0, color = "white", size = 1) +

geom_hline(yintercept = 0, color = "white", size = 1) +

geom_point(size = 6) +

scale_color_manual(values = c("green4","blue","brown1")) +

stat_ellipse(aes(x = PC1, y = PC2, fill = subgrp), data = dt, type = "norm",

geom = "polygon", level = 0.5, alpha=0.2, show.legend = FALSE) +

scale_fill_manual(values = c("green4","blue","brown1")) + p0## Warning: Using `size` aesthetic for lines was deprecated in ggplot2 3.4.0.

## ℹ Please use `linewidth` instead.

## This warning is displayed once every 8 hours.

## Call `lifecycle::last_lifecycle_warnings()` to see where this warning was

## generated.

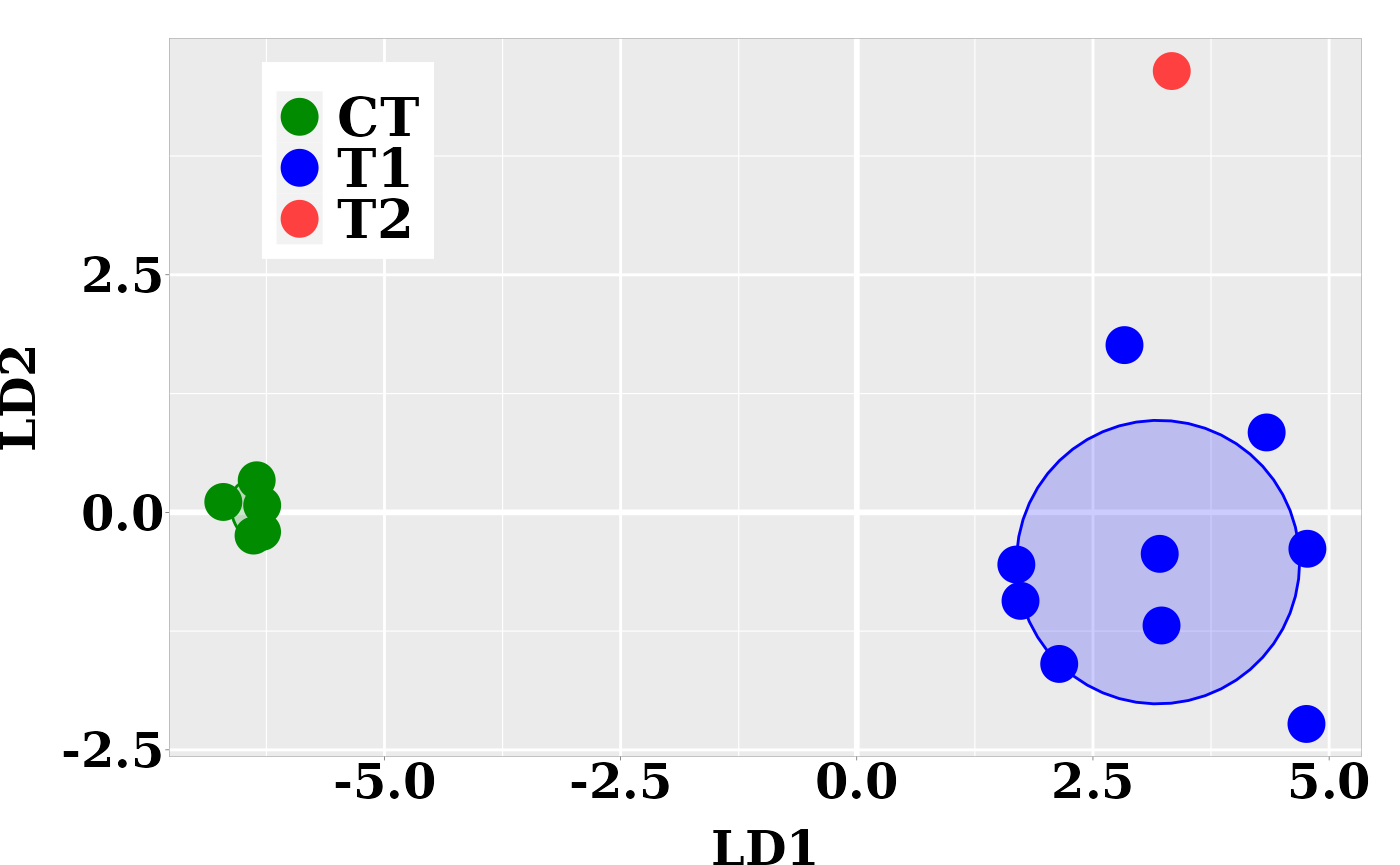

Graphic LD1 vs LD2

In the current case, better resolution is obtained with the linear discriminant functions, which is based on the three firsts PCs. Notice that the number principal components used the LDA step must be lower than the number of individuals (\(N\)) divided by 3: \(N/3\).

ind.coord <- predict(ld, newdata = data.frame(dmgm), type = "scores")

dt <- data.frame(ind.coord, subgrp = grps)

p0 <- theme(

axis.text.x = element_text( face = "bold", size = 18, color="black",

# hjust = 0.5, vjust = 0.5,

family = "serif", angle = 0,

margin = margin(1,0,1,0, unit = "pt" )),

axis.text.y = element_text( face = "bold", size = 18, color="black",

family = "serif",

margin = margin( 0,0.1,0,0, unit = "mm" )),

axis.title.x = element_text(face = "bold", family = "serif", size = 18,

color="black", vjust = 0 ),

axis.title.y = element_text(face = "bold", family = "serif", size = 18,

color="black",

margin = margin( 0,2,0,0, unit = "mm" ) ),

legend.title=element_blank(),

legend.text = element_text(size = 20, face = "bold", family = "serif"),

legend.position = c(0.15, 0.83),

panel.border = element_rect(fill=NA, colour = "black",size=0.07),

panel.grid.minor = element_line(color= "white",size = 0.2),

axis.ticks = element_line(size = 0.1), axis.ticks.length = unit(0.5, "mm"),

plot.margin = unit(c(1,1,0,0), "lines"))

ggplot(dt, aes(x = LD1, y = LD2, colour = grps)) +

geom_vline(xintercept = 0, color = "white", size = 1) +

geom_hline(yintercept = 0, color = "white", size = 1) +

geom_point(size = 6) +

scale_color_manual(values = c("green4","blue","brown1")) +

stat_ellipse(aes(x = LD1, y = LD2, fill = subgrp), data = dt, type = "norm",

geom = "polygon", level = 0.5, alpha=0.2, show.legend = FALSE) +

scale_fill_manual(values = c("green4","blue","brown1")) + p0## Too few points to calculate an ellipse

References

Session info

Here is the output of sessionInfo() on the system on

which this document was compiled running pandoc 3.1.1:

## R version 4.3.2 (2023-10-31)

## Platform: x86_64-pc-linux-gnu (64-bit)

## Running under: Ubuntu 22.04.3 LTS

##

## Matrix products: default

## BLAS: /usr/lib/x86_64-linux-gnu/blas/libblas.so.3.10.0

## LAPACK: /usr/lib/x86_64-linux-gnu/lapack/liblapack.so.3.10.0

##

## locale:

## [1] LC_CTYPE=en_US.UTF-8 LC_NUMERIC=C

## [3] LC_TIME=en_US.UTF-8 LC_COLLATE=en_US.UTF-8

## [5] LC_MONETARY=en_US.UTF-8 LC_MESSAGES=en_US.UTF-8

## [7] LC_PAPER=en_US.UTF-8 LC_NAME=C

## [9] LC_ADDRESS=C LC_TELEPHONE=C

## [11] LC_MEASUREMENT=en_US.UTF-8 LC_IDENTIFICATION=C

##

## time zone: America/New_York

## tzcode source: system (glibc)

##

## attached base packages:

## [1] stats4 stats graphics grDevices utils datasets methods

## [8] base

##

## other attached packages:

## [1] ape_5.7-1 ggplot2_3.4.4 MethylIT.utils_0.1.1

## [4] MethylIT_0.3.2.7 rtracklayer_1.60.1 GenomicRanges_1.52.1

## [7] GenomeInfoDb_1.36.4 IRanges_2.34.1 S4Vectors_0.38.2

## [10] BiocGenerics_0.46.0

##

## loaded via a namespace (and not attached):

## [1] rstudioapi_0.15.0 jsonlite_1.8.7

## [3] magrittr_2.0.3 modeltools_0.2-23

## [5] farver_2.1.1 rmarkdown_2.25

## [7] fs_1.6.3 BiocIO_1.10.0

## [9] zlibbioc_1.46.0 ragg_1.2.6

## [11] vctrs_0.6.5 memoise_2.0.1

## [13] Rsamtools_2.16.0 RCurl_1.98-1.13

## [15] htmltools_0.5.7 S4Arrays_1.0.6

## [17] Formula_1.2-5 pROC_1.18.5

## [19] caret_6.0-94 sass_0.4.8

## [21] parallelly_1.36.0 bslib_0.6.1

## [23] desc_1.4.3 sandwich_3.0-2

## [25] plyr_1.8.9 zoo_1.8-12

## [27] lubridate_1.9.3 cachem_1.0.8

## [29] GenomicAlignments_1.36.0 lifecycle_1.0.4

## [31] minpack.lm_1.2-4 iterators_1.0.14

## [33] pkgconfig_2.0.3 Matrix_1.6-4

## [35] R6_2.5.1 fastmap_1.1.1

## [37] GenomeInfoDbData_1.2.10 MatrixGenerics_1.12.3

## [39] future_1.33.0 digest_0.6.33

## [41] colorspace_2.1-0 AnnotationDbi_1.62.2

## [43] textshaping_0.3.7 RSQLite_2.3.3

## [45] labeling_0.4.3 randomForest_4.7-1.1

## [47] fansi_1.0.6 timechange_0.2.0

## [49] httr_1.4.7 abind_1.4-5

## [51] compiler_4.3.2 proxy_0.4-27

## [53] bit64_4.0.5 withr_2.5.2

## [55] BiocParallel_1.34.2 betareg_3.1-4

## [57] DBI_1.1.3 highr_0.10

## [59] MASS_7.3-60 lava_1.7.3

## [61] DelayedArray_0.26.7 rjson_0.2.21

## [63] ModelMetrics_1.2.2.2 tools_4.3.2

## [65] lmtest_0.9-40 future.apply_1.11.0

## [67] nnet_7.3-19 glue_1.6.2

## [69] restfulr_0.0.15 nlme_3.1-164

## [71] grid_4.3.2 reshape2_1.4.4

## [73] generics_0.1.3 recipes_1.0.8

## [75] gtable_0.3.4 class_7.3-22

## [77] data.table_1.14.8 flexmix_2.3-19

## [79] utf8_1.2.4 XVector_0.40.0

## [81] foreach_1.5.2 pillar_1.9.0

## [83] stringr_1.5.1 genefilter_1.82.1

## [85] splines_4.3.2 dplyr_1.1.4

## [87] lattice_0.22-5 survival_3.5-7

## [89] bit_4.0.5 annotate_1.78.0

## [91] tidyselect_1.2.0 Biostrings_2.68.1

## [93] knitr_1.45 SummarizedExperiment_1.30.2

## [95] xfun_0.41 Biobase_2.60.0

## [97] hardhat_1.3.0 timeDate_4022.108

## [99] matrixStats_1.2.0 proto_1.0.0

## [101] stringi_1.8.3 yaml_2.3.8

## [103] evaluate_0.23 codetools_0.2-19

## [105] tibble_3.2.1 cli_3.6.2

## [107] rpart_4.1.23 nls2_0.3-3

## [109] xtable_1.8-4 systemfonts_1.0.5

## [111] munsell_0.5.0 jquerylib_0.1.4

## [113] Rcpp_1.0.11 globals_0.16.2

## [115] png_0.1-8 XML_3.99-0.16

## [117] parallel_4.3.2 pkgdown_2.0.7

## [119] gower_1.0.1 blob_1.2.4

## [121] bitops_1.0-7 listenv_0.9.0

## [123] ipred_0.9-14 e1071_1.7-13

## [125] scales_1.3.0 prodlim_2023.08.28

## [127] purrr_1.0.2 crayon_1.5.2

## [129] rlang_1.1.2 KEGGREST_1.40.1